publications

Publications in reversed chronological order

2025

- Neuron

Competitive integration of time and reward explains value-sensitive foraging decisions and frontal cortex ramping dynamicsMichael Bukwich, Malcolm G. Campbell, David Zoltowski, Lyle Kingsbury, Momchil S. Tomov, Joshua Stern, HyungGoo R. Kim, Jan Drugowitsch, and 2 more authorsNeuron, Oct 2025

Competitive integration of time and reward explains value-sensitive foraging decisions and frontal cortex ramping dynamicsMichael Bukwich, Malcolm G. Campbell, David Zoltowski, Lyle Kingsbury, Momchil S. Tomov, Joshua Stern, HyungGoo R. Kim, Jan Drugowitsch, and 2 more authorsNeuron, Oct 2025Patch foraging is a ubiquitous decision-making process in which animals decide when to abandon a resource patch of diminishing value to pursue an alternative. We developed a virtual foraging task in which mouse behavior varied systematically with patch value. Behavior could be explained by models integrating time and rewards antagonistically, scaled by a slowly varying latent patience state. Describing a mechanism rather than a normative prescription, these models quantitatively captured deviations from optimal foraging theory. Neuropixels recordings throughout frontal areas revealed distributed ramping signals, concentrated in the frontal cortex, from which multiple integrator models? decision variables could be decoded equally well. These signals reflected key aspects of decision models: they ramped gradually, responded oppositely to time and rewards, were sensitive to patch richness, and retained memory of reward history. Together, these results identify integration via frontal cortex ramping dynamics as a candidate mechanism for solving patch-foraging problems.

@article{Bukwich2025, author = {Bukwich, Michael and Campbell, Malcolm G. and Zoltowski, David and Kingsbury, Lyle and Tomov, Momchil S. and Stern, Joshua and Kim, HyungGoo R. and Drugowitsch, Jan and Linderman, Scott W. and Uchida, Naoshige}, title = {Competitive integration of time and reward explains value-sensitive foraging decisions and frontal cortex ramping dynamics}, year = {2025}, month = oct, day = {15}, doi = {10.1016/j.neuron.2025.07.008}, volume = {113}, issue = {20}, pages = {3458--3475.e12}, url = {https://doi.org/10.1016/j.neuron.2025.07.008}, journal = {Neuron}, publisher = {Elsevier}, } - bioRxiv

Distributed control circuits across a brain-and-cord connectomeAlexander Shakeel Bates, Jasper S Phelps, Minsu Kim, Helen H Yang, Arie Matsliah, Zaki Ajabi, Eric Perlman, Kevin M Delgado, and 84 more authorsbioRxiv, Oct 2025

Distributed control circuits across a brain-and-cord connectomeAlexander Shakeel Bates, Jasper S Phelps, Minsu Kim, Helen H Yang, Arie Matsliah, Zaki Ajabi, Eric Perlman, Kevin M Delgado, and 84 more authorsbioRxiv, Oct 2025Just as genomes revolutionized molecular genetics, connectomes (maps of neurons and synapses) are transforming neuroscience. To date, the only species with complete connectomes are worms and sea squirts (103-104 synapses). By contrast, the fruit fly is more complex (108 synaptic connections), with a brain that supports learning and spatial memory and an intricate ventral nerve cord analogous to the vertebrate spinal cord. Here we report the first adult fly connectome that unites the brain and ventral nerve cord, and we leverage this resource to investigate principles of neural control. We show that effector cells (motor neurons, endocrine cells and efferent neurons targeting the viscera) are primarily influenced by local sensory cells in the same body part, forming local feedback loops. These local loops are linked by long-range circuits involving ascending and descending neurons organized into behavior-centric modules. Single ascending and descending neurons are often positioned to influence the voluntary movement of multiple body parts, together with endocrine cells or visceral organs that support those movements. Brain regions involved in learning and navigation supervise these circuits. These results reveal an architecture that is distributed, parallelized and embodied (tightly connected to effectors), reminiscent of distributed control architectures in engineered systems

@article{Bates2025, author = {Bates, Alexander Shakeel and Phelps, Jasper S and Kim, Minsu and Yang, Helen H and Matsliah, Arie and Ajabi, Zaki and Perlman, Eric and Delgado, Kevin M and Osman, Mohammed Abdal Monium and Salmon, Christopher K and Gager, Jay and Silverman, Benjamin and Renauld, Sophia and Collie, Matthew F and Fan, Jingxuan and Pacheco, Diego A and Zhao, Yunzhi and Patel, Janki and Zhang, Wenyi and Serratosa Capdevilla, Laia and Roberts, Ruairi JV and Munnelly, Eva J and Griggs, Nina and Langley, Helen and Moya-Llamas, Borja and Maloney, Ryan T and Yu, Szi-chieh and Sterling, Amy R and Sorek, Marissa and Kruk, Krzysztof and Serafetinidis, Nikitas and Dhawan, Serene and Stuerner, Tomke and Klemm, Finja and Brooks, Paul and Lesser, Ellen and Jones, Jessica M and Pierce-Lundgren, Sara E and Lee, Su-Yee and Luo, Yichen and Cook, Andrew P and McKim, Theresa H and Kophs, Emily C and Falt, Tjalda and Negron Morales, Alexa M and Burke, Austin and Hebditch, James and Willie, Kyle P and Willie, Ryan and Popovych, Sergiy and Kemnitz, Nico and Ih, Dodam and Lee, Kisuk and Lu, Ran and Halageri, Akhilesh and Bae, J. Alexander and Jourdan, Ben and Schwartzman, Gregory and Demarest, Damian D and Behnke, Emily and Bland, Doug and Kristiansen, Anne and Skelton, Jaime and Stocks, Tom and Garner, Dustin and Salman, Farzaan and Daly, Kevin C and Hernandez, Anthony and Kumar, Sandeep and Consortium, The BANC-FlyWire and Dorkenwald, Sven and Collman, Forrest and Suver, Marie P and Fenk, Lisa M and Pankratz, Michael J and Jefferis, Gregory SXE and Eichler, Katharina and Seeds, Andrew M and Hampel, Stefanie and Agrawal, Sweta and Zandawala, Meet and Macrina, Thomas and Adjavon, Diane-Yayra and Funke, Jan and Tuthill, John C and Azevedo, Anthony and Seung, H. Sebastian and de Bivort, Benjamin L and Murthy, Mala and Drugowitsch, Jan and Wilson, Rachel I and Lee, Wei-Chung Allen}, title = {Distributed control circuits across a brain-and-cord connectome}, elocation-id = {2025.07.31.667571}, year = {2025}, doi = {10.1101/2025.07.31.667571}, publisher = {Cold Spring Harbor Laboratory}, url = {https://www.biorxiv.org/content/early/2025/08/01/2025.07.31.667571}, journal = {bioRxiv}, } - bioRxiv

A unifying theory of receptive field heterogeneity predicts hippocampal spatial tuningZach Cohen, and Jan DrugowitschbioRxiv, Oct 2025

A unifying theory of receptive field heterogeneity predicts hippocampal spatial tuningZach Cohen, and Jan DrugowitschbioRxiv, Oct 2025Neural populations exhibit receptive fields that vary in their sizes and shapes. Despite the prevalence of such tuning heterogeneity, we lack a unified theory of its computational benefits. Here, we present a framework that unifies and extends previous theories, finding that receptive field heterogeneity generally increases the information encoded in population activity. The information gain depends on heterogeneity in receptive field size, shape, and on the dimensionality of the encoded quantity. For populations encoding two-dimensional quantities, such as place cells encoding allocentric spatial position, our theory predicts that both size and shape receptive field heterogeneity are necessary to induce information gain, whereas size heterogeneity alone is insufficient. We thus turned to CA1 hippocampal activity to test our theoretical predictions - in particular, to measure shape heterogeneity, which has previously received little attention. To overcome limitations of traditional methods for estimating place cell tuning, we developed a fully probabilistic approach for measuring size and shape heterogeneity, in which tuning estimates were strategically weighted by explicitly measured uncertainty arising from biased or incomplete traversals of the environment. Our method furnished evidence that hippocampal receptive fields indeed exhibit strong degrees of size and shape heterogeneity, abiding by the normative predictions of our theory. Overall, our work makes novel predictions about the relative benefits of receptive field heterogeneities beyond our application to place cells, and provides a principled technique for testing them.Competing Interest StatementThe authors have declared no competing interest.National Institutes of Health, https://ror.org/01cwqze88, U19NS118246, R01NS116753Kempner Institute, Graduate fellowship

@article{Cohen2025, author = {Cohen, Zach and Drugowitsch, Jan}, title = {A unifying theory of receptive field heterogeneity predicts hippocampal spatial tuning}, elocation-id = {2025.07.26.666958}, year = {2025}, doi = {10.1101/2025.07.26.666958}, publisher = {Cold Spring Harbor Laboratory}, url = {https://www.biorxiv.org/content/early/2025/07/31/2025.07.26.666958}, journal = {bioRxiv}, } - NatNeuro

Multimodal cue integration and learning in a neural representation of head directionMelanie A. Basnak, Anna Kutschireiter, Tatsuo S. Okubo, Albert Chen, Pavel Gorelik, Jan Drugowitsch, and Rachel I. WilsonNature Neuroscience, Oct 2025

Multimodal cue integration and learning in a neural representation of head directionMelanie A. Basnak, Anna Kutschireiter, Tatsuo S. Okubo, Albert Chen, Pavel Gorelik, Jan Drugowitsch, and Rachel I. WilsonNature Neuroscience, Oct 2025Navigation requires us to take account of multiple spatial cues with varying levels of informativeness and learn their spatial relationships. Here we investigate this process in the Drosophila head direction system, which functions as a ring attractor and a topographic map of head direction. Using population calcium imaging and multimodal virtual reality environments, we show that increasing cue informativeness improves encoding accuracy and produces a narrower and higher bump of activity. When cues conflict, the more informative cue exerts more weight. A familiar cue is weighted more heavily and used to guide the remapping of a less familiar cue. When a cue is less informative, it is remapped more readily in response to cue conflict. All these results can be explained by an attractor model with plastic sensory synapses. Our findings provide a mechanistic explanation for how the brain assembles spatial representations through inference and learning.

@article{Basnak2025, author = {Basnak, Melanie A. and Kutschireiter, Anna and Okubo, Tatsuo S. and Chen, Albert and Gorelik, Pavel and Drugowitsch, Jan and Wilson, Rachel I.}, title = {Multimodal cue integration and learning in a neural representation of head direction}, journal = {Nature Neuroscience}, issn = {1546-1726}, year = {2025}, volume = {28}, pages = {1729-1740}, url = {https://doi.org/10.1038/s41593-024-01823-z}, doi = {10.1038/s41593-024-01823-z}, } - Annurev-vision

Hierarchical Vector Analysis of Visual Motion PerceptionSamuel J. Gershman, Johannes Bill, and Jan DrugowitschAnnual Review of Vision Science, Oct 2025

Hierarchical Vector Analysis of Visual Motion PerceptionSamuel J. Gershman, Johannes Bill, and Jan DrugowitschAnnual Review of Vision Science, Oct 2025Visual scenes are often populated by densely layered and complex patterns of motion. The problem of motion parsing is to break down these patterns into simpler components that are meaningful for perception and action. Psychophysical evidence suggests that the brain decomposes motion patterns into a hierarchy of relative motion vectors. Recent computational models have shed light on the algorithmic and neural basis of this parsing strategy. We review these models and the experiments that were designed to test their predictions. Zooming out, we argue that hierarchical motion perception is a tractable model system for understanding how aspects of high-level cognition such as compositionality may be implemented in neural circuitry.

@article{Gershman2025, author = {Gershman, Samuel J. and Bill, Johannes and Drugowitsch, Jan}, title = {Hierarchical Vector Analysis of Visual Motion Perception}, journal = {Annual Review of Vision Science}, issn = {2374-4642}, year = {2025}, publisher = {Annual Reviews}, url = {https://www.annualreviews.org/content/journals/10.1146/annurev-vision-110323-031344}, doi = {10.1146/annurev-vision-110323-031344}, pages = {411-422}, } - Nature

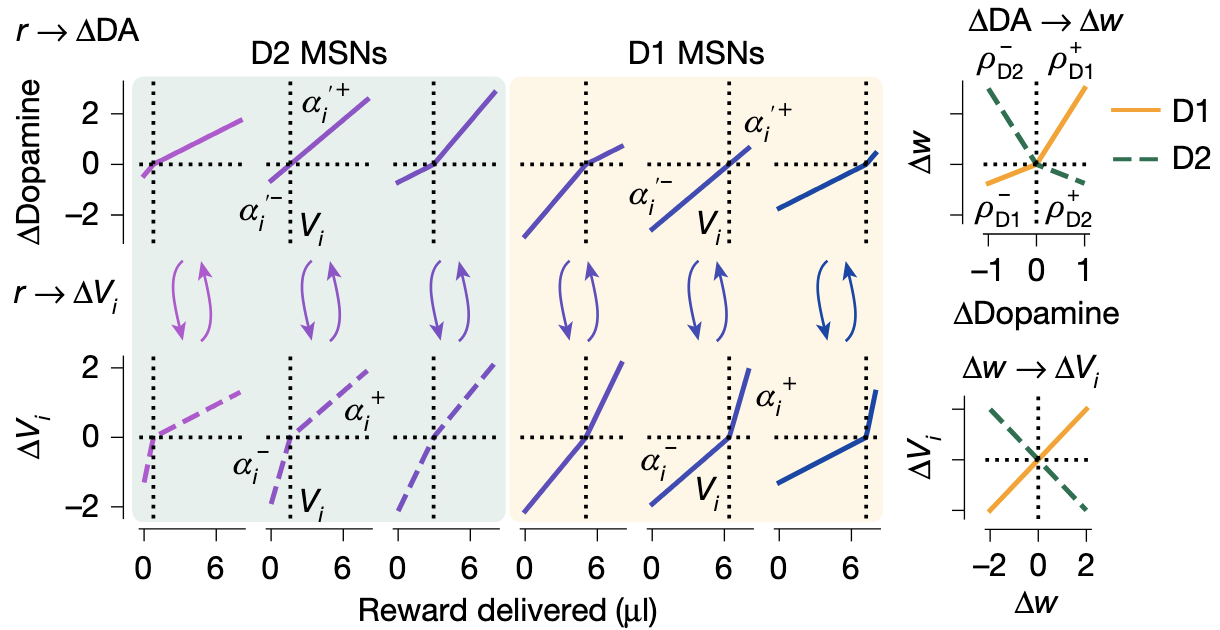

An opponent striatal circuit for distributional reinforcement learningAdam S. Lowet, Qiao Zheng, Melissa Meng, Sata Matias, Drugowitsch Jan, and Naoshige UchidaNature, Oct 2025

An opponent striatal circuit for distributional reinforcement learningAdam S. Lowet, Qiao Zheng, Melissa Meng, Sata Matias, Drugowitsch Jan, and Naoshige UchidaNature, Oct 2025Machine learning research has achieved large performance gains on a wide range of tasks by expanding the learning target from mean rewards to entire probability distributions of rewards—an approach known as distributional reinforcement learning (RL). The mesolimbic dopamine system is thought to underlie RL in the mammalian brain by updating a representation of mean value in the striatum2, but little is known about whether, where and how neurons in this circuit encode information about higher-order moments of reward distributions3. Here, to fill this gap, we used high-density probes (Neuropixels) to record striatal activity from mice performing a classical conditioning task in which reward mean, reward variance and stimulus identity were independently manipulated. In contrast to traditional RL accounts, we found robust evidence for abstract encoding of variance in the striatum. Chronic ablation of dopamine inputs disorganized these distributional representations in the striatum without interfering with mean value coding. Two-photon calcium imaging and optogenetics revealed that the two major classes of striatal medium spiny neurons—D1 and D2—contributed to this code by preferentially encoding the right and left tails of the reward distribution, respectively. We synthesize these findings into a new model of the striatum and mesolimbic dopamine that harnesses the opponency between D1 and D2 medium spiny neurons4–9 to reap the computational benefits of distributional RL.

@article{Lowet2025, title = {An opponent striatal circuit for distributional reinforcement learning}, journal = {Nature}, year = {2025}, volume = {639}, pages = {717-726}, doi = {10.1038/s41586-024-08488-5}, url = {https://doi.org/10.1038/s41586-024-08488-5}, author = {Lowet, Adam S. and Zheng, Qiao and Meng, Melissa and Matias, Sata and Jan, Drugowitsch and Uchida, Naoshige}, }

2024

- OSF

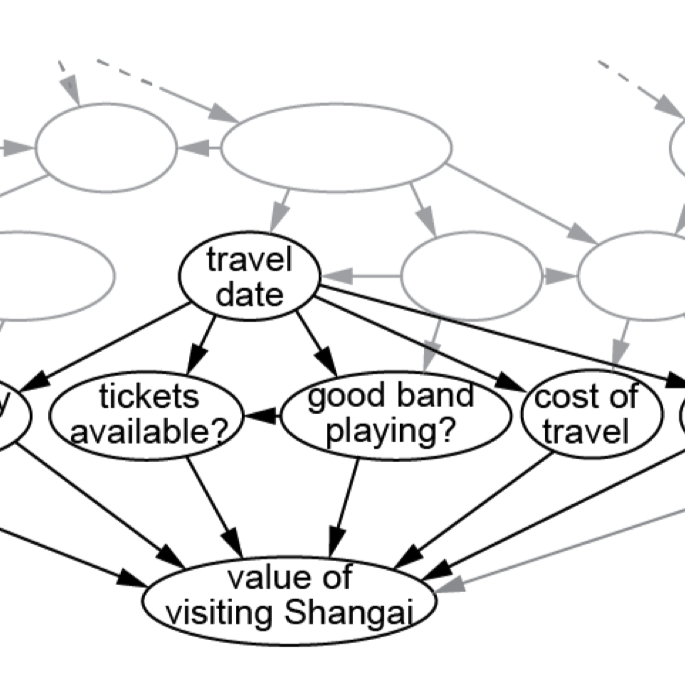

Major sources of computational complexity in complex decision-makingJan Drugowitsch, and Alexandre PougetNov 2024

Major sources of computational complexity in complex decision-makingJan Drugowitsch, and Alexandre PougetNov 2024What makes decision-making hard, and what determines the time it takes to make a decision? For simple decisions, the standard answer implicates neuronal noise: its presence makes decisions hard, and averaging it out comes at the cost of long reaction times. We argue that this explanation is unlikely to hold for complex decisions. Instead, complex decisions are constrained by two main factors: memory retrieval and value computation. Indeed, most decisions require retrieving relevant information from memory, and use it to compute the choice options’ values. For the large memories of vertebrate brains, both operations can be extremely complex even if the neural circuits implementing them are perfectly noiseless. Yet, the importance of these factors has not yet been fully recognized in systems neuroscience, which tends to focus on tasks in which values are retrieved from simple noisy look-up tables. The interrogation of more complex and realistic tasks, similar to the ones used in human research, might help bridge this gap.

@misc{DrugowitschPouget2024, title = {Major sources of computational complexity in complex decision-making}, url = {osf.io/vw4yr}, doi = {10.31219/osf.io/vw4yr}, publisher = {OSF Preprints}, author = {Drugowitsch, Jan and Pouget, Alexandre}, year = {2024}, month = nov, } - CellReports

Nerve injury disrupts temporal processing in the spinal cord dorsal horn through alterations in PV+ interneuronsGenelle Rankin, Anda M. Chirila, Alan J. Emanuel, Zihe Zhang, Clifford J. Woolf, Jan Drugowitsch, and David D. GintyCell Reports, Nov 2024

Nerve injury disrupts temporal processing in the spinal cord dorsal horn through alterations in PV+ interneuronsGenelle Rankin, Anda M. Chirila, Alan J. Emanuel, Zihe Zhang, Clifford J. Woolf, Jan Drugowitsch, and David D. GintyCell Reports, Nov 2024How mechanical allodynia following nerve injury is encoded in patterns of neural activity in the spinal cord dorsal horn (DH) remains incompletely understood. We address this in mice using the spared nerve injury model of neuropathic pain and in vivo electrophysiological recordings. Surprisingly, despite dramatic behavioral over-reactivity to mechanical stimuli following nerve injury, an overall increase in sensitivity or reactivity of DH neurons is not observed. We do, however, observe a marked decrease in correlated neural firing patterns, including the synchrony of mechanical stimulus-evoked firing, across the DH. Alterations in DH temporal firing patterns are recapitulated by silencing DH parvalbumin+ (PV+) interneurons, previously implicated in mechanical allodynia, as are allodynic pain-like behaviors. These findings reveal decorrelated DH network activity, driven by alterations in PV+ interneurons, as a prominent feature of neuropathic pain and suggest restoration of proper temporal activity as a potential therapeutic strategy to treat chronic neuropathic pain.

@article{Rankin2024, title = {Nerve injury disrupts temporal processing in the spinal cord dorsal horn through alterations in PV+ interneurons}, journal = {Cell Reports}, volume = {43}, number = {2}, pages = {113718}, year = {2024}, issn = {2211-1247}, doi = {10.1016/j.celrep.2024.113718}, url = {https://www.sciencedirect.com/science/article/pii/S2211124724000469}, author = {Rankin, Genelle and Chirila, Anda M. and Emanuel, Alan J. and Zhang, Zihe and Woolf, Clifford J. and Drugowitsch, Jan and Ginty, David D.}, keywords = {spinal cord dorsal horn, mechanical allodynia, dorsal horn inhibition, in vivo electrophysiology, dorsal horn network activity}, }

2023

- PhilTransRSocB

Causal inference during closed-loop navigation: parsing of self- and object-motionJean-Paul Noel, Johanes Bill, Haoran Ding, John Vastola, Gregory C DeAngelis, Dora Angelaki, and Jan DrugowitschPhilosophical Transactions of the Royal Society B, Aug 2023

Causal inference during closed-loop navigation: parsing of self- and object-motionJean-Paul Noel, Johanes Bill, Haoran Ding, John Vastola, Gregory C DeAngelis, Dora Angelaki, and Jan DrugowitschPhilosophical Transactions of the Royal Society B, Aug 2023A key computation in building adaptive internal models of the external world is to ascribe sensory signals to their likely cause(s), a process of causal inference (CI). CI is well studied within the framework of two-alternative forced-choice tasks, but less well understood within the cadre of naturalistic action–perception loops. Here, we examine the process of disambiguating retinal motion caused by self- and/or object-motion during closed-loop navigation. First, we derive a normative account specifying how observers ought to intercept hidden and moving targets given their belief about (i) whether retinal motion was caused by the target moving, and (ii) if so, with what velocity. Next, in line with the modelling results, we show that humans report targets as stationary and steer towards their initial rather than final position more often when they are themselves moving, suggesting a putative misattribution of object-motion to the self. Further, we predict that observers should misattribute retinal motion more often: (i) during passive rather than active self-motion (given the lack of an efference copy informing self-motion estimates in the former), and (ii) when targets are presented eccentrically rather than centrally (given that lateral self-motion flow vectors are larger at eccentric locations during forward self-motion). Results support both of these predictions. Lastly, analysis of eye movements show that, while initial saccades toward targets were largely accurate regardless of the self-motion condition, subsequent gaze pursuit was modulated by target velocity during object-only motion, but not during concurrent object- and self-motion. These results demonstrate CI within action–perception loops, and suggest a protracted temporal unfolding of the computations characterizing CI.

@article{philtransrsocb2023, title = {Causal inference during closed-loop navigation: parsing of self- and object-motion}, author = {Noel, Jean-Paul and Bill, Johanes and Ding, Haoran and Vastola, John and DeAngelis, Gregory C and Angelaki, Dora and Drugowitsch, Jan}, journal = {Philosophical Transactions of the Royal Society B}, volume = {378}, issue = {1886}, pages = {20220344}, year = {2023}, month = aug, doi = {10.1098/rstb.2022.0344}, url = {https://doi.org/10.1098/rstb.2022.0344}, } - CerebCortex

Diverse effects of gaze direction on heading perception in humansWei Gao, Yipeng Lin, Jiangrong Shen, Jianing Han, Xiaoxiao Song, Yukun Lu, Huijia Zhan, Qianbing Li, and 6 more authorsCerebral Cortex, Feb 2023

Diverse effects of gaze direction on heading perception in humansWei Gao, Yipeng Lin, Jiangrong Shen, Jianing Han, Xiaoxiao Song, Yukun Lu, Huijia Zhan, Qianbing Li, and 6 more authorsCerebral Cortex, Feb 2023Gaze change can misalign spatial reference frames encoding visual and vestibular signals in cortex, which may affect the heading discrimination. Here, by systematically manipulating the eye-in-head and head-on-body positions to change the gaze direction of subjects, the performance of heading discrimination was tested with visual, vestibular, and combined stimuli in a reaction-time task in which the reaction time is under the control of subjects. We found the gaze change induced substantial biases in perceived heading, increased the threshold of discrimination and reaction time of subjects in all stimulus conditions. For the visual stimulus, the gaze effects were induced by changing the eye-in-world position, and the perceived heading was biased in the opposite direction of gaze. In contrast, the vestibular gaze effects were induced by changing the eye-in-head position, and the perceived heading was biased in the same direction of gaze. Although the bias was reduced when the visual and vestibular stimuli were combined, integration of the 2 signals substantially deviated from predictions of an extended diffusion model that accumulates evidence optimally over time and across sensory modalities. These findings reveal diverse gaze effects on the heading discrimination and emphasize that the transformation of spatial reference frames may underlie the effects.

@article{cerebcortex2023, author = {Gao, Wei and Lin, Yipeng and Shen, Jiangrong and Han, Jianing and Song, Xiaoxiao and Lu, Yukun and Zhan, Huijia and Li, Qianbing and Ge, Haoting and Lin, Zheng and Shi, Wenlei and Drugowitsch, Jan and Tang, Huajin and Chen, Xiaodong}, title = {{Diverse effects of gaze direction on heading perception in humans}}, journal = {Cerebral Cortex}, volume = {33}, number = {11}, pages = {6772-6784}, year = {2023}, month = feb, issn = {1047-3211}, doi = {10.1093/cercor/bhac541}, url = {https://doi.org/10.1093/cercor/bhac541}, } - PNAS

Bayesian inference in ring attractor networksAnna Kutschireiter, Melanie A Basnak, Rachel I. Wilson, and Jan DrugowitschProceedings of the National Academy of Sciences, Feb 2023

Bayesian inference in ring attractor networksAnna Kutschireiter, Melanie A Basnak, Rachel I. Wilson, and Jan DrugowitschProceedings of the National Academy of Sciences, Feb 2023Working memories are thought to be held in attractor networks in the brain. These attractors should keep track of the uncertainty associated with each memory, so as to weigh it properly against conflicting new evidence. However, conventional attractors do not represent uncertainty. Here, we show how uncertainty could be incorporated into an attractor, specifically a ring attractor that encodes head direction. First, we introduce a rigorous normative framework (the circular Kalman filter) for benchmarking the performance of a ring attractor under conditions of uncertainty. Next, we show that the recurrent connections within a conventional ring attractor can be retuned to match this benchmark. This allows the amplitude of network activity to grow in response to confirmatory evidence, while shrinking in response to poor-quality or strongly conflicting evidence. This “Bayesian ring attractor” performs near-optimal angular path integration and evidence accumulation. Indeed, we show that a Bayesian ring attractor is consistently more accurate than a conventional ring attractor. Moreover, near-optimal performance can be achieved without exact tuning of the network connections. Finally, we use large-scale connectome data to show that the network can achieve near-optimal performance even after we incorporate biological constraints. Our work demonstrates how attractors can implement a dynamic Bayesian inference algorithm in a biologically plausible manner, and it makes testable predictions with direct relevance to the head direction system as well as any neural system that tracks direction, orientation, or periodic rhythms.

@article{pnas2023, title = {Bayesian inference in ring attractor networks}, author = {Kutschireiter, Anna and Basnak, Melanie A and Wilson, Rachel I. and Drugowitsch, Jan}, journal = {Proceedings of the National Academy of Sciences}, volume = {120}, issue = {9}, pages = {e2210622120}, year = {2023}, month = feb, doi = {10.1073/pnas.2210622120}, url = {https://doi.org/10.1073/pnas.2210622120}, } - PMLR

Is the information geometry of probabilistic population codes learnable?John J. Vastola, Zach Cohen, and Jan DrugowitschIn Proceedings of the 1st NeurIPS Workshop on Symmetry and Geometry in Neural Representations, 03 dec 2023

Is the information geometry of probabilistic population codes learnable?John J. Vastola, Zach Cohen, and Jan DrugowitschIn Proceedings of the 1st NeurIPS Workshop on Symmetry and Geometry in Neural Representations, 03 dec 2023One reason learning the geometry of latent neural manifolds from neural activity data is difficult is that the ground truth is generally not known, which can make manifold learning methods hard to evaluate. Probabilistic population codes (PPCs), a class of biologically plausible and self-consistent models of neural populations that encode parametric probability distributions, may offer a theoretical setting where it is possible to rigorously study manifold learning. It is natural to define the neural manifold of a PPC as the statistical manifold of the encoded distribution, and we derive a mathematical result that the information geometry of the statistical manifold is directly related to measurable covariance matrices. This suggests a simple but rigorously justified decoding strategy based on principal component analysis, which we illustrate using an analytically tractable PPC.

2022

- NatHumBeh

Efficient stabilization of imprecise statistical inference through conditional belief updatingJulie Drevet, Jan Drugowitsch, and Valentin WyartNature Human Behaviour, Dec 2022

Efficient stabilization of imprecise statistical inference through conditional belief updatingJulie Drevet, Jan Drugowitsch, and Valentin WyartNature Human Behaviour, Dec 2022Statistical inference is the optimal process for forming and maintaining accurate beliefs about uncertain environments. However, human inference comes with costs due to its associated biases and limited precision. Indeed, biased or imprecise inference can trigger variable beliefs and unwarranted changes in behaviour. Here, by studying decisions in a sequential categorization task based on noisy visual stimuli, we obtained converging evidence that humans reduce the variability of their beliefs by updating them only when the reliability of incoming sensory information is judged as sufficiently strong. Instead of integrating the evidence provided by all stimuli, participants actively discarded as much as a third of stimuli. This conditional belief updating strategy shows good test–retest reliability, correlates with perceptual confidence and explains human behaviour better than previously described strategies. This seemingly suboptimal strategy not only reduces the costs of imprecise computations but also, counterintuitively, increases the accuracy of resulting decisions.

@article{nathumanbehav2022, title = {Efficient stabilization of imprecise statistical inference through conditional belief updating}, author = {Drevet, Julie and Drugowitsch, Jan and Wyart, Valentin}, journal = {Nature Human Behaviour}, volume = {6}, pages = {1691--1704}, year = {2022}, month = dec, doi = {10.1038/s41562-022-01445-0}, url = {https://doi.org/10.1038/s41562-022-01445-0}, data = {https://figshare.com/projects/Efficient_stabilization_of_imprecise_statistical_inference_through_conditional_belief_updating/140170}, } - NatComms

Visual motion perception as online hierarchical inferenceJohannes Bill, Samuel J. Gershman, and Jan DrugowitschNature Communications, Dec 2022

Visual motion perception as online hierarchical inferenceJohannes Bill, Samuel J. Gershman, and Jan DrugowitschNature Communications, Dec 2022Identifying the structure of motion relations in the environment is critical for navigation, tracking, prediction, and pursuit. Yet, little is known about the mental and neural computations that allow the visual system to infer this structure online from a volatile stream of visual information. We propose online hierarchical Bayesian inference as a principled solution for how the brain might solve this complex perceptual task. We derive an online Expectation-Maximization algorithm that explains human percepts qualitatively and quantitatively for a diverse set of stimuli, covering classical psychophysics experiments, ambiguous motion scenes, and illusory motion displays. We thereby identify normative explanations for the origin of human motion structure perception and make testable predictions for future psychophysics experiments. The proposed online hierarchical inference model furthermore affords a neural network implementation which shares properties with motion-sensitive cortical areas and motivates targeted experiments to reveal the neural representations of latent structure.

@article{natcomms2022, title = {Visual motion perception as online hierarchical inference}, author = {Bill, Johannes and Gershman, Samuel J. and Drugowitsch, Jan}, journal = {Nature Communications}, volume = {13}, issue = {1}, pages = {1--17}, year = {2022}, month = dec, doi = {10.1038/s41467-022-34805-5}, url = {https://doi.org/10.1038/s41467-022-34805-5}, } - JOSE

Neuromatch Academy: a 3-week, online summer school in computational neuroscienceBernard ’t Hart, Titipat Achakulvisut, Ayoade Adeyemi, Athena Akrami, Bradly Alicea, Alicia Alonso-Andres, Diego Alzate-Correa, Arash Ash, and 154 more authorsJournal of Open Source Education, Dec 2022

Neuromatch Academy: a 3-week, online summer school in computational neuroscienceBernard ’t Hart, Titipat Achakulvisut, Ayoade Adeyemi, Athena Akrami, Bradly Alicea, Alicia Alonso-Andres, Diego Alzate-Correa, Arash Ash, and 154 more authorsJournal of Open Source Education, Dec 2022@article{jose2022, doi = {10.21105/jose.00118}, url = {https://doi.org/10.21105/jose.00118}, year = {2022}, publisher = {The Open Journal}, volume = {5}, number = {49}, pages = {118}, author = {{'t Hart}, Bernard and Achakulvisut, Titipat and Adeyemi, Ayoade and Akrami, Athena and Alicea, Bradly and Alonso-Andres, Alicia and Alzate-Correa, Diego and Ash, Arash and Ballesteros, Jesus and Balwani, Aishwarya and Batty, Eleanor and Beierholm, Ulrik and Benjamin, Ari and Bhalla, Upinder and Blohm, Gunnar and Blohm, Joachim and Bonnen, Kathryn and Brigham, Marco and Brunton, Bingni and Butler, John and Caie, Brandon and Gajic, N and Chatterjee, Sharbatanu and Chavlis, Spyridon and Chen, Ruidong and Cheng, You and Chow, H.m. and Chua, Raymond and Dai, Yunwei and David, Isaac and DeWitt, Eric and Denis, Julien and Dipani, Alish and Dorschel, Arianna and Drugowitsch, Jan and Dwivedi, Kshitij and Escola, Sean and Fan, Haoxue and Farhoodi, Roozbeh and Fei, Yicheng and Fiquet, Pierre-Étienne and Fontolan, Lorenzo and Forest, Jeremy and Fujishima, Yuki and Galbraith, Byron and Galdamez, Mario and Gao, Richard and Gjorgjieva, Julijana and Gonzalez, Alexander and Gu, Qinglong and Guo, Yueqi and Guo, Ziyi and Gupta, Pankaj and Gurbuz, Busra and Haimerl, Caroline and Harrod, Jordan and Hyafil, Alexandre and Irani, Martin and Jacobson, Daniel and Johnson, Michelle and Jones, Ilenna and Karni, Gili and Kass, Robert and Kim, Hyosub and Kist, Andreas and Koene, Randal and Kording, Konrad and Krause, Matthew and Kumar, Arvind and Kühn, Norma and Lc, Ray and Laporte, Matthew and Lee, Junseok and Li, Songting and Lin, Sikun and Lin, Yang and Liu, Shuze and Liu, Tony and Livezey, Jesse and Lu, Linlin and Macke, Jakob and Mahaffy, Kelly and Martins, A and Martorell, Nicolás and Martínez, Manolo and Mattar, Marcelo and Menendez, Jorge and Miller, Kenneth and Mineault, Patrick and Mohammadi, Nosratullah and Mohsenzadeh, Yalda and Morgenroth, Elenor and Morshedzadeh, Taha and Mosberger, Alice and Muliya, Madhuvanthi and Mur, Marieke and Murray, John and Nd, Yashas and Naud, Richard and Nayak, Prakriti and Oak, Anushka and Castillo, Itzel and Orouji, Seyedmehdi and Otero-Millan, Jorge and Pachitariu, Marius and Pandey, Biraj and Paredes, Renato and Parent, Jesse and Park, Il and Peters, Megan and Pitkow, Xaq and Poirazi, Panayiota and Popal, Haroon and Prabhakaran, Sandhya and Qiu, Tian and Ragunathan, Srinidhi and Rodriguez-Cruces, Raul and Rolnick, David and Sahoo, Ashish and Salehinajafabadi, Saeed and Savin, Cristina and Saxena, Shreya and Schrater, Paul and Schroeder, Karen and Schwarze, Alice and Sedigh-Sarvestani, Madineh and Sekhar, K and Shadmehr, Reza and Shanechi, Maryam and Sharma, Siddhant and Shea-Brown, Eric and Shenoy, Krishna and Shimabukuro, Carolina and Shuvaev, Sergey and Sin, Man and Smith, Maurice and Steinmetz, Nicholas and Stosio, Karolina and Straley, Elizabeth and Strandquist, Gabrielle and Stringer, Carsen and Tomar, Rimjhim and Tran, Ngoc and Triantafillou, Sofia and Udeigwe, Lawrence and Valeriani, Davide and Valton, Vincent and Vaziri-Pashkam, Maryam and Vincent, Peter and Vishne, Gal and Wallisch, Pascal and Wang, Peiyuan and Ward, Claire and Waskom, Michael and Wei, Kunlin and Wu, Anqi and Wu, Zhengwei and Wyble, Brad and Zhang, Lei and Zysman, Daniel and Uquillas, Federico and van Viegen, Tara}, title = {Neuromatch Academy: a 3-week, online summer school in computational neuroscience}, journal = {Journal of Open Source Education}, } - eLife

Controllability boosts neural and cognitive signatures of changes-of-mind in uncertain environmentsMarion Rouault, Aurélien Weiss, Junseok K Lee, Jan Drugowitsch, Valerian Chambon, and Valentin WyarteLife, Sep 2022

Controllability boosts neural and cognitive signatures of changes-of-mind in uncertain environmentsMarion Rouault, Aurélien Weiss, Junseok K Lee, Jan Drugowitsch, Valerian Chambon, and Valentin WyarteLife, Sep 2022In uncertain environments, seeking information about alternative choice options is essential for adaptive learning and decision-making. However, information seeking is usually confounded with changes-of-mind about the reliability of the preferred option. Here, we exploited the fact that information seeking requires control over which option to sample to isolate its behavioral and neurophysiological signatures. We found that changes-of-mind occurring with control require more evidence against the current option, are associated with reduced confidence, but are nevertheless more likely to be confirmed on the next decision. Multimodal neurophysiological recordings showed that these changes-of-mind are preceded by stronger activation of the dorsal attention network in magnetoencephalography, and followed by increased pupil-linked arousal during the presentation of decision outcomes. Together, these findings indicate that information seeking increases the saliency of evidence perceived as the direct consequence of one’s own actions.

@article{elife2022, article_type = {journal}, title = {Controllability boosts neural and cognitive signatures of changes-of-mind in uncertain environments}, author = {Rouault, Marion and Weiss, Aurélien and Lee, Junseok K and Drugowitsch, Jan and Chambon, Valerian and Wyart, Valentin}, editor = {Roiser, Jonathan and Frank, Michael J and Press, Clare}, volume = {11}, year = {2022}, month = sep, pub_date = {2022-09-13}, pages = {e75038}, citation = {eLife 2022;11:e75038}, doi = {10.7554/eLife.75038}, url = {https://doi.org/10.7554/eLife.75038}, keywords = {confidence, information seeking, exploration, inference, decision-making}, journal = {eLife}, issn = {2050-084X}, publisher = {eLife Sciences Publications, Ltd}, } - RLDM

Hierarchical structure learning for perceptual decision making in visual motion perceptionJohannes Bill, Samuel J. Gershman, and Jan DrugowitschIn The 5th Multi-disciplinary Conference on Reinforcement Learning and Decision Making (RLDM-22). Brown University, Providence, RI, USA., May 2022

Hierarchical structure learning for perceptual decision making in visual motion perceptionJohannes Bill, Samuel J. Gershman, and Jan DrugowitschIn The 5th Multi-disciplinary Conference on Reinforcement Learning and Decision Making (RLDM-22). Brown University, Providence, RI, USA., May 2022Successful behavior in the real world critically depends on discovering the latent structure behind the volatile inputs reaching our sensory system. Our brains face the online task of discovering structure at multiple timescales ranging from short-lived correlations, to the structure underlying a scene, to life-time learning of causal relations. Little is known about the mental and neural computations driving the brain’s ability of online, multi-timescale structure inference. We studied these computations by the example of visual motion perception owing to the importance of structured motion for behavior. We propose online hierarchical Bayesian inference as a principled solution for how the brain might solve multi-timescale structure inference. We derive an online Expectation-Maximization algorithm that continually updates an estimate of a visual scene’s underlying structure while using this inferred structure to organize incoming noisy velocity observations into meaningful, stable percepts. We show that the algorithm explains human percepts qualitatively and quantitatively for a diverse set of stimuli, covering classical psychophysics experiments, ambiguous motion scenes, and illusory motion displays. It explains experimental results of human motion structure classification with higher fidelity than a previous ideal observer-based model, and provides normative explanations for the origin of biased perception in motion direction repulsion experiments. To identify a scene’s structure the algorithm recruits motion components from a set of frequently occurring features, such as global translation or grouping of stimuli. We demonstrate in computer simulations how these features can be learned online from experience. Finally, the algorithm affords a neural network implementation which shares properties with motion-sensitive cortical areas MT and MSTd and motivates a novel class of neuroscientific experiments to reveal the neural representations of latent structure.

@inproceedings{rldm2022, title = {Hierarchical structure learning for perceptual decision making in visual motion perception}, author = {Bill, Johannes and Gershman, Samuel J. and Drugowitsch, Jan}, booktitle = {The 5th Multi-disciplinary Conference on Reinforcement Learning and Decision Making (RLDM-22). Brown University, Providence, RI, USA.}, year = {2022}, month = may, page = {1--4}, } - Neuron

A large majority of awake hippocampal sharp-wave ripples feature spatial trajectories with momentumEmma L. Krause, and Jan DrugowitschNeuron, May 2022

A large majority of awake hippocampal sharp-wave ripples feature spatial trajectories with momentumEmma L. Krause, and Jan DrugowitschNeuron, May 2022During periods of rest, hippocampal place cells feature bursts of activity called sharp-wave ripples (SWRs). Heuristic approaches have revealed that a small fraction of SWRs appear to “simulate” trajectories through the environment, called awake hippocampal replay. However, the functional role of a majority of these SWRs remains unclear. We find, using Bayesian model comparison of state-space models to characterize the spatiotemporal dynamics embedded in SWRs, that almost all SWRs of foraging rodents simulate such trajectories. Furthermore, these trajectories feature momentum, or inertia in their velocities, that mirrors the animals’ natural movement, in contrast to replay events during sleep, which lack such momentum. Last, we show that past analyses of replayed trajectories for navigational planning were biased by the heuristic SWR sub-selection. Our findings thus identify the dominant function of awake SWRs as simulating trajectories with momentum and provide a principled foundation for future work on their computational function.

@article{neuron2022, title = {A large majority of awake hippocampal sharp-wave ripples feature spatial trajectories with momentum}, journal = {Neuron}, volume = {110}, number = {4}, pages = {722-733.e8}, year = {2022}, issn = {0896-6273}, doi = {10.1016/j.neuron.2021.11.014}, url = {https://www.sciencedirect.com/science/article/pii/S0896627321009521}, author = {Krause, Emma L. and Drugowitsch, Jan}, keywords = {sharp-wave ripples, hippocampal replay, hippocampus, state-space models, Bayesian model comparison}, } - IEEETSP

Projection Filtering With Observed State Increments With Applications in Continuous-Time Circular FilteringAnna Kutschireiter, Luke Rast, and Jan DrugowitschIEEE Transactions on Signal Processing, Jan 2022

Projection Filtering With Observed State Increments With Applications in Continuous-Time Circular FilteringAnna Kutschireiter, Luke Rast, and Jan DrugowitschIEEE Transactions on Signal Processing, Jan 2022Angular path integration is the ability of a system to estimate its own heading direction from potentially noisy angular velocity (or increment) observations. Non-probabilistic algorithms for angular path integration, which rely on a summation of these noisy increments, do not appropriately take into account the reliability of such observations, which is essential for appropriately weighing one’s current heading direction estimate against incoming information. In a probabilistic setting, angular path integration can be formulated as a continuous-time nonlinear filtering problem (circular filtering) with observed state increments. The circular symmetry of heading direction makes this inference task inherently nonlinear, thereby precluding the use of popular inference algorithms such as Kalman filters, rendering the problem analytically inaccessible. Here, we derive an approximate solution to circular continuous-time filtering, which integrates state increment observations while maintaining a fixed representation through both state propagation and observational updates. Specifically, we extend the established projection-filtering method to account for observed state increments and apply this framework to the circular filtering problem. We further propose a generative model for continuous-time angular-valued direct observations of the hidden state, which we integrate seamlessly into the projection filter. Applying the resulting scheme to a model of probabilistic angular path integration, we derive an algorithm for circular filtering, which we term the circular Kalman filter. Importantly, this algorithm is analytically accessible, interpretable, and outperforms an alternative filter based on a Gaussian approximation.

@article{ieeetsp2022, author = {Kutschireiter, Anna and Rast, Luke and Drugowitsch, Jan}, journal = {IEEE Transactions on Signal Processing}, title = {Projection Filtering With Observed State Increments With Applications in Continuous-Time Circular Filtering}, year = {2022}, volume = {70}, number = {}, pages = {686-700}, doi = {10.1109/TSP.2022.3143471}, month = jan, url = {https://doi.org/10.1109/TSP.2022.3143471}, }

2021

- NatComms

Interacting with volatile environments stabilizes hidden-state inference and its brain signaturesAurélien Weiss, Valérian Chambon, Junseok K. Lee, Jan Drugowitsch, and Valentin WyartNature Communications, Jan 2021

Interacting with volatile environments stabilizes hidden-state inference and its brain signaturesAurélien Weiss, Valérian Chambon, Junseok K. Lee, Jan Drugowitsch, and Valentin WyartNature Communications, Jan 2021Making accurate decisions in uncertain environments requires identifying the generative cause of sensory cues, but also the expected outcomes of possible actions. Although both cognitive processes can be formalized as Bayesian inference, they are commonly studied using different experimental frameworks, making their formal comparison difficult. Here, by framing a reversal learning task either as cue-based or outcome-based inference, we found that humans perceive the same volatile environment as more stable when inferring its hidden state by interaction with uncertain outcomes than by observation of equally uncertain cues. Multivariate patterns of magnetoencephalographic (MEG) activity reflected this behavioral difference in the neural interaction between inferred beliefs and incoming evidence, an effect originating from associative regions in the temporal lobe. Together, these findings indicate that the degree of control over the sampling of volatile environments shapes human learning and decision-making under uncertainty.

@article{natcomms2021b, title = {Interacting with volatile environments stabilizes hidden-state inference and its brain signatures}, journal = {Nature Communications}, volume = {12}, number = {2228}, pages = {1--17}, year = {2021}, doi = {10.1038/s41467-021-22396-6}, url = {https://doi.org/10.1038/s41467-021-22396-6}, author = {Weiss, Aurélien and Chambon, Valérian and Lee, Junseok K. and Drugowitsch, Jan and Wyart, Valentin}, } - eLife

Optimal policy for attention-modulated decisions explains human fixation behaviorAnthony I. Jang, Ravi Sharma, and Jan DrugowitscheLife, Mar 2021

Optimal policy for attention-modulated decisions explains human fixation behaviorAnthony I. Jang, Ravi Sharma, and Jan DrugowitscheLife, Mar 2021Traditional accumulation-to-bound decision-making models assume that all choice options are processed with equal attention. In real life decisions, however, humans alternate their visual fixation between individual items to efficiently gather relevant information (Yang et al., 2016). These fixations also causally affect one’s choices, biasing them toward the longer-fixated item (Krajbich et al., 2010). We derive a normative decision-making model in which attention enhances the reliability of information, consistent with neurophysiological findings (Cohen and Maunsell, 2009). Furthermore, our model actively controls fixation changes to optimize information gathering. We show that the optimal model reproduces fixation-related choice biases seen in humans and provides a Bayesian computational rationale for this phenomenon. This insight led to additional predictions that we could confirm in human data. Finally, by varying the relative cognitive advantage conferred by attention, we show that decision performance is benefited by a balanced spread of resources between the attended and unattended items.

@article{elife2021, article_type = {journal}, title = {Optimal policy for attention-modulated decisions explains human fixation behavior}, author = {Jang, Anthony I. and Sharma, Ravi and Drugowitsch, Jan}, editor = {Tsetsos, Konstantinos and Gold, Joshua I and Tsetsos, Konstantinos}, volume = {10}, year = {2021}, month = mar, pub_date = {2021-03-26}, pages = {e63436}, citation = {eLife 2021;10:e63436}, doi = {10.7554/eLife.63436}, url = {https://doi.org/10.7554/eLife.63436}, keywords = {decision-making, visual attention, diffusion models, optimality}, journal = {eLife}, issn = {2050-084X}, publisher = {eLife Sciences Publications, Ltd}, data = {https://doi.org/10.5281/zenodo.4636831}, } - SciRep

Human visual motion perception shows hallmarks of Bayesian structural inferenceSichao Yang, Johannes Bill, Drugowitsch Jan, and Samuel J. GershmanScientific Reports, Feb 2021

Human visual motion perception shows hallmarks of Bayesian structural inferenceSichao Yang, Johannes Bill, Drugowitsch Jan, and Samuel J. GershmanScientific Reports, Feb 2021Motion relations in visual scenes carry an abundance of behaviorally relevant information, but little is known about how humans identify the structure underlying a scene’s motion in the first place. We studied the computations governing human motion structure identification in two psychophysics experiments and found that perception of motion relations showed hallmarks of Bayesian structural inference. At the heart of our research lies a tractable task design that enabled us to reveal the signatures of probabilistic reasoning about latent structure. We found that a choice model based on the task’s Bayesian ideal observer accurately matched many facets of human structural inference, including task performance, perceptual error patterns, single-trial responses, participant-specific differences, and subjective decision confidence—especially, when motion scenes were ambiguous and when object motion was hierarchically nested within other moving reference frames. Our work can guide future neuroscience experiments to reveal the neural mechanisms underlying higher-level visual motion perception.

@article{scirep2021, title = {Human visual motion perception shows hallmarks of Bayesian structural inference}, author = {Yang, Sichao and Bill, Johannes and Jan, Drugowitsch and Gershman, Samuel J.}, volume = {11}, issue = {3714}, year = {2021}, month = feb, pages = {1-14}, doi = {10.1038/s41598-021-82175-7}, url = {https://doi.org/10.1038/s41598-021-82175-7}, journal = {Scientific Reports}, data = {https://github.com/DrugowitschLab/motion-structure-identification}, } - NatComms

Scaling of sensory information in large neural populations shows signatures of information-limiting correlationsMohammadMehdi Kafashan, Anna W. Jaffe, Selmaan N. Chettih, Ramon Nogueira, Iñigo Arandia-Romero, Christopher D. Harvey, Rubén Moreno-Bote, and Jan DrugowitschNature Communications, Jan 2021

Scaling of sensory information in large neural populations shows signatures of information-limiting correlationsMohammadMehdi Kafashan, Anna W. Jaffe, Selmaan N. Chettih, Ramon Nogueira, Iñigo Arandia-Romero, Christopher D. Harvey, Rubén Moreno-Bote, and Jan DrugowitschNature Communications, Jan 2021How is information distributed across large neuronal populations within a given brain area? Information may be distributed roughly evenly across neuronal populations, so that total information scales linearly with the number of recorded neurons. Alternatively, the neural code might be highly redundant, meaning that total information saturates. Here we investigate how sensory information about the direction of a moving visual stimulus is distributed across hundreds of simultaneously recorded neurons in mouse primary visual cortex. We show that information scales sublinearly due to correlated noise in these populations. We compartmentalized noise correlations into information-limiting and nonlimiting components, then extrapolate to predict how information grows with even larger neural populations. We predict that tens of thousands of neurons encode 95% of the information about visual stimulus direction, much less than the number of neurons in primary visual cortex. These findings suggest that the brain uses a widely distributed, but nonetheless redundant code that supports recovering most sensory information from smaller subpopulations.

@article{natcomms2021a, title = {Scaling of sensory information in large neural populations shows signatures of information-limiting correlations}, author = {Kafashan, MohammadMehdi and Jaffe, Anna W. and Chettih, Selmaan N. and Nogueira, Ramon and Arandia-Romero, Iñigo and Harvey, Christopher D. and Moreno-Bote, Rubén and Drugowitsch, Jan}, volume = {12}, issue = {473}, year = {2021}, month = jan, pages = {1-16}, doi = {10.1038/s41467-020-20722-y}, url = {https://doi.org/10.1038/s41467-020-20722-y}, journal = {Nature Communications}, data = {https://doi.org/10.6084/m9.figshare.13274951}, }

2020

- TINS

Distributional Reinforcement Learning in the BrainAdam S. Lowet, Qiao Zheng, Sara Matias, Jan Drugowitsch, and Naoshige UchidaTrends in Neurosciences, Oct 2020

Distributional Reinforcement Learning in the BrainAdam S. Lowet, Qiao Zheng, Sara Matias, Jan Drugowitsch, and Naoshige UchidaTrends in Neurosciences, Oct 2020Learning about rewards and punishments is critical for survival. Classical studies have demonstrated an impressive correspondence between the firing of dopamine neurons in the mammalian midbrain and the reward prediction errors of reinforcement learning algorithms, which express the difference between actual reward and predicted mean reward. However, it may be advantageous to learn not only the mean but also the complete distribution of potential rewards. Recent advances in machine learning have revealed a biologically plausible set of algorithms for reconstructing this reward distribution from experience. Here, we review the mathematical foundations of these algorithms as well as initial evidence for their neurobiological implementation. We conclude by highlighting outstanding questions regarding the circuit computation and behavioral readout of these distributional codes.

@article{tins2020, title = {Distributional Reinforcement Learning in the Brain}, journal = {Trends in Neurosciences}, volume = {43}, number = {12}, pages = {980-997}, year = {2020}, month = oct, issn = {0166-2236}, doi = {https://doi.org/10.1016/j.tins.2020.09.004}, url = {https://www.sciencedirect.com/science/article/pii/S0166223620301983}, author = {Lowet, Adam S. and Zheng, Qiao and Matias, Sara and Drugowitsch, Jan and Uchida, Naoshige}, keywords = {dopamine, population coding, reward, deep neural networks, machine learning, artificial intelligence}, } - PNAS

Hierarchical structure is employed by humans during visual motion perceptionJohannes Bill, Hrag Pailian, Samuel J. Gershman, and Jan DrugowitschProceedings of the National Academy of Sciences, Sep 2020

Hierarchical structure is employed by humans during visual motion perceptionJohannes Bill, Hrag Pailian, Samuel J. Gershman, and Jan DrugowitschProceedings of the National Academy of Sciences, Sep 2020In the real world, complex dynamic scenes often arise from the composition of simpler parts. The visual system exploits this structure by hierarchically decomposing dynamic scenes: When we see a person walking on a train or an animal running in a herd, we recognize the individual’s movement as nested within a reference frame that is, itself, moving. Despite its ubiquity, surprisingly little is understood about the computations underlying hierarchical motion perception. To address this gap, we developed a class of stimuli that grant tight control over statistical relations among object velocities in dynamic scenes. We first demonstrate that structured motion stimuli benefit human multiple object tracking performance. Computational analysis revealed that the performance gain is best explained by human participants making use of motion relations during tracking. A second experiment, using a motion prediction task, reinforced this conclusion and provided fine-grained information about how the visual system flexibly exploits motion structure.

@article{pnas2020b, author = {Bill, Johannes and Pailian, Hrag and Gershman, Samuel J. and Drugowitsch, Jan}, title = {Hierarchical structure is employed by humans during visual motion perception}, journal = {Proceedings of the National Academy of Sciences}, volume = {117}, number = {39}, pages = {24581-24589}, year = {2020}, month = sep, doi = {10.1073/pnas.2008961117}, url = {https://www.pnas.org/doi/abs/10.1073/pnas.2008961117}, data = {https://doi.org/10.6084/m9.figshare.9856271.v1}, } - PNAS

Heuristics and optimal solutions to the breadth–depth dilemmaRubén Moreno-Bote, Jorge Ramírez-Ruiz, Jan Drugowitsch, and Benjamin Y. HaydenProceedings of the National Academy of Sciences, Aug 2020

Heuristics and optimal solutions to the breadth–depth dilemmaRubén Moreno-Bote, Jorge Ramírez-Ruiz, Jan Drugowitsch, and Benjamin Y. HaydenProceedings of the National Academy of Sciences, Aug 2020In multialternative risky choice, we are often faced with the opportunity to allocate our limited information-gathering capacity between several options before receiving feedback. In such cases, we face a natural trade-off between breadth—spreading our capacity across many options—and depth—gaining more information about a smaller number of options. Despite its broad relevance to daily life, including in many naturalistic foraging situations, the optimal strategy in the breadth–depth trade-off has not been delineated. Here, we formalize the breadth–depth dilemma through a finite-sample capacity model. We find that, if capacity is small (∼10 samples), it is optimal to draw one sample per alternative, favoring breadth. However, for larger capacities, a sharp transition is observed, and it becomes best to deeply sample a very small fraction of alternatives, which roughly decreases with the square root of capacity. Thus, ignoring most options, even when capacity is large enough to shallowly sample all of them, is a signature of optimal behavior. Our results also provide a rich casuistic for metareasoning in multialternative decisions with bounded capacity using close-to-optimal heuristics.

@article{pnas2020a, author = {Moreno-Bote, Rubén and Ramírez-Ruiz, Jorge and Drugowitsch, Jan and Hayden, Benjamin Y.}, title = {Heuristics and optimal solutions to the breadth–depth dilemma}, journal = {Proceedings of the National Academy of Sciences}, volume = {117}, number = {33}, pages = {19799-19808}, year = {2020}, month = aug, doi = {10.1073/pnas.2004929117}, url = {https://www.pnas.org/doi/abs/10.1073/pnas.2004929117}, } - Science

Meissner corpuscles and their spatially intermingled afferents underlie gentle touch perceptionNicole L. Neubarth, Alan J. Emanuel, Yin Liu, Mark W. Springel, Annie Handler, Qiyu Zhang, Brendan P. Lehnert, Chong Guo, and 11 more authorsScience, Jun 2020

Meissner corpuscles and their spatially intermingled afferents underlie gentle touch perceptionNicole L. Neubarth, Alan J. Emanuel, Yin Liu, Mark W. Springel, Annie Handler, Qiyu Zhang, Brendan P. Lehnert, Chong Guo, and 11 more authorsScience, Jun 2020The Meissner corpuscle, a mechanosensory end organ, was discovered more than 165 years ago and has since been found in the glabrous skin of all mammals, including that on human fingertips. Although prominently featured in textbooks, the function of the Meissner corpuscle is unknown. Neubarth et al. generated adult mice without Meissner corpuscles and used them to show that these corpuscles alone mediate behavioral responses to, and perception of, gentle forces (see the Perspective by Marshall and Patapoutian). Each Meissner corpuscle is innervated by two molecularly distinct, yet physiologically similar, mechanosensory neurons. These two neuronal subtypes are developmentally interdependent and their endings are intertwined within the corpuscle. Both Meissner mechanosensory neuron subtypes are homotypically tiled, ensuring uniform and complete coverage of the skin, yet their receptive fields are overlapping and offset with respect to each other. Science, this issue p. eabb2751; see also p. 1311 Light touch perception and fine sensorimotor control arise from spatially overlapping mechanoreceptors of the Meissner corpuscle. Meissner corpuscles are mechanosensory end organs that densely occupy mammalian glabrous skin. We generated mice that selectively lacked Meissner corpuscles and found them to be deficient in both perceiving the gentlest detectable forces acting on glabrous skin and fine sensorimotor control. We found that Meissner corpuscles are innervated by two mechanoreceptor subtypes that exhibit distinct responses to tactile stimuli. The anatomical receptive fields of these two mechanoreceptor subtypes homotypically tile glabrous skin in a manner that is offset with respect to one another. Electron microscopic analysis of the two Meissner afferents within the corpuscle supports a model in which the extent of lamellar cell wrappings of mechanoreceptor endings determines their force sensitivity thresholds and kinetic properties.

@article{science2020, author = {Neubarth, Nicole L. and Emanuel, Alan J. and Liu, Yin and Springel, Mark W. and Handler, Annie and Zhang, Qiyu and Lehnert, Brendan P. and Guo, Chong and Orefice, Lauren L. and Abdelaziz, Amira and DeLisle, Michelle M. and Iskols, Michael and Rhyins, Julia and Kim, Soo J. and Cattel, Stuart J. and Regehr, Wade and Harvey, Christopher D. and Drugowitsch, Jan and Ginty, David D.}, title = {Meissner corpuscles and their spatially intermingled afferents underlie gentle touch perception}, journal = {Science}, volume = {368}, number = {6497}, pages = {eabb2751}, year = {2020}, month = jun, doi = {10.1126/science.abb2751}, url = {https://www.science.org/doi/abs/10.1126/science.abb2751}, } - NatComms

The impact of learning on perceptual decisions and its implication for speed-accuracy tradeoffsAndré G. Mendonça, Jan Drugowitsch, M. Inês Vicente, Eric E. J. DeWitt, Alexandre Pouget, and Zachary F. MainenNature Communications, Jun 2020

The impact of learning on perceptual decisions and its implication for speed-accuracy tradeoffsAndré G. Mendonça, Jan Drugowitsch, M. Inês Vicente, Eric E. J. DeWitt, Alexandre Pouget, and Zachary F. MainenNature Communications, Jun 2020The Meissner corpuscle, a mechanosensory end organ, was discovered more than 165 years ago and has since been found in the glabrous skin of all mammals, including that on human fingertips. Although prominently featured in textbooks, the function of the Meissner corpuscle is unknown. Neubarth et al. generated adult mice without Meissner corpuscles and used them to show that these corpuscles alone mediate behavioral responses to, and perception of, gentle forces (see the Perspective by Marshall and Patapoutian). Each Meissner corpuscle is innervated by two molecularly distinct, yet physiologically similar, mechanosensory neurons. These two neuronal subtypes are developmentally interdependent and their endings are intertwined within the corpuscle. Both Meissner mechanosensory neuron subtypes are homotypically tiled, ensuring uniform and complete coverage of the skin, yet their receptive fields are overlapping and offset with respect to each other. Science, this issue p. eabb2751; see also p. 1311 Light touch perception and fine sensorimotor control arise from spatially overlapping mechanoreceptors of the Meissner corpuscle. Meissner corpuscles are mechanosensory end organs that densely occupy mammalian glabrous skin. We generated mice that selectively lacked Meissner corpuscles and found them to be deficient in both perceiving the gentlest detectable forces acting on glabrous skin and fine sensorimotor control. We found that Meissner corpuscles are innervated by two mechanoreceptor subtypes that exhibit distinct responses to tactile stimuli. The anatomical receptive fields of these two mechanoreceptor subtypes homotypically tile glabrous skin in a manner that is offset with respect to one another. Electron microscopic analysis of the two Meissner afferents within the corpuscle supports a model in which the extent of lamellar cell wrappings of mechanoreceptor endings determines their force sensitivity thresholds and kinetic properties.

@article{natcomms2020, author = {Mendonça, André G. and Drugowitsch, Jan and Vicente, M. Inês and DeWitt, Eric E. J. and Pouget, Alexandre and Mainen, Zachary F.}, title = {The impact of learning on perceptual decisions and its implication for speed-accuracy tradeoffs}, journal = {Nature Communications}, volume = {11}, number = {2757}, pages = {1--15}, year = {2020}, doi = {10.1038/s41467-020-16196-7}, url = {https://doi.org/10.1038/s41467-020-16196-7}, eprint = {https://doi.org/10.1038/s41467-020-16196-7}, } - NeurIPS

Adaptation Properties Allow Identification of Optimized Neural CodesLuke Rast, and Jan DrugowitschIn Advances in Neural Information Processing Systems, Jun 2020

Adaptation Properties Allow Identification of Optimized Neural CodesLuke Rast, and Jan DrugowitschIn Advances in Neural Information Processing Systems, Jun 2020The adaptation of neural codes to the statistics of their environment is well captured by efficient coding approaches. Here we solve an inverse problem: characterizing the objective and constraint functions that efficient codes appear to be optimal for, on the basis of how they adapt to different stimulus distributions. We formulate a general efficient coding problem, with flexible objective and constraint functions and minimal parametric assumptions. Solving special cases of this model, we provide solutions to broad classes of Fisher information-based efficient coding problems, generalizing a wide range of previous results. We show that different objective function types impose qualitatively different adaptation behaviors, while constraints enforce characteristic deviations from classic efficient coding signatures. Despite interaction between these effects, clear signatures emerge for both unconstrained optimization problems and information-maximizing objective functions. Asking for a fixed-point of the neural code adaptation, we find an objective-independent characterization of constraints on the neural code. We use this result to propose an experimental paradigm that can characterize both the objective and constraint functions that an observed code appears to be optimized for.

@inproceedings{neurips2020, author = {Rast, Luke and Drugowitsch, Jan}, booktitle = {Advances in Neural Information Processing Systems}, editor = {Larochelle, H. and Ranzato, M. and Hadsell, R. and Balcan, M.F. and Lin, H.}, pages = {1142--1152}, publisher = {Curran Associates, Inc.}, title = {Adaptation Properties Allow Identification of Optimized Neural Codes}, volume = {33}, year = {2020}, }

2019

- PNAS

Learning optimal decisions with confidenceJan Drugowitsch, André G. Mendonça, Zachary F. Mainen, and Alexandre PougetProceedings of the National Academy of Sciences, Nov 2019

Learning optimal decisions with confidenceJan Drugowitsch, André G. Mendonça, Zachary F. Mainen, and Alexandre PougetProceedings of the National Academy of Sciences, Nov 2019Diffusion decision models (DDMs) are immensely successful models for decision making under uncertainty and time pressure. In the context of perceptual decision making, these models typically start with two input units, organized in a neuron–antineuron pair. In contrast, in the brain, sensory inputs are encoded through the activity of large neuronal populations. Moreover, while DDMs are wired by hand, the nervous system must learn the weights of the network through trial and error. There is currently no normative theory of learning in DDMs and therefore no theory of how decision makers could learn to make optimal decisions in this context. Here, we derive such a rule for learning a near-optimal linear combination of DDM inputs based on trial-by-trial feedback. The rule is Bayesian in the sense that it learns not only the mean of the weights but also the uncertainty around this mean in the form of a covariance matrix. In this rule, the rate of learning is proportional (respectively, inversely proportional) to confidence for incorrect (respectively, correct) decisions. Furthermore, we show that, in volatile environments, the rule predicts a bias toward repeating the same choice after correct decisions, with a bias strength that is modulated by the previous choice’s difficulty. Finally, we extend our learning rule to cases for which one of the choices is more likely a priori, which provides insights into how such biases modulate the mechanisms leading to optimal decisions in diffusion models.

@article{pnas2019, author = {Drugowitsch, Jan and Mendonça, André G. and Mainen, Zachary F. and Pouget, Alexandre}, title = {Learning optimal decisions with confidence}, journal = {Proceedings of the National Academy of Sciences}, volume = {116}, number = {49}, pages = {24872-24880}, year = {2019}, month = nov, doi = {10.1073/pnas.1906787116}, url = {https://www.pnas.org/doi/abs/10.1073/pnas.1906787116}, } - PhysRevE

Family of closed-form solutions for two-dimensional correlated diffusion processesHaozhe Shan, Rubén Moreno-Bote, and Jan DrugowitschPhysical Review E, Sep 2019

Family of closed-form solutions for two-dimensional correlated diffusion processesHaozhe Shan, Rubén Moreno-Bote, and Jan DrugowitschPhysical Review E, Sep 2019Diffusion processes with boundaries are models of transport phenomena with wide applicability across many fields. These processes are described by their probability density functions (PDFs), which often obey Fokker-Planck equations (FPEs). While obtaining analytical solutions is often possible in the absence of boundaries, obtaining closed-form solutions to the FPE is more challenging once absorbing boundaries are present. As a result, analyses of these processes have largely relied on approximations or direct simulations. In this paper, we studied two-dimensional, time-homogeneous, spatially correlated diffusion with linear, axis-aligned, absorbing boundaries. Our main result is the explicit construction of a full family of closed-form solutions for their PDFs using the method of images. We found that such solutions can be built if and only if the correlation coefficient ρ between the two diffusing processes takes one of a numerable set of values. Using a geometric argument, we derived the complete set of ρ’s where such solutions can be found. Solvable ρ’s are given by ρ=−cos(π/k), where k ∈ Z+ ∪ +∞. Solutions were validated in simulations. Qualitative behaviors of the process appear to vary smoothly over ρ, allowing extrapolation from our solutions to cases with unsolvable ρ’s.

@article{physreve2019, title = {Family of closed-form solutions for two-dimensional correlated diffusion processes}, author = {Shan, Haozhe and Moreno-Bote, Rub\'en and Drugowitsch, Jan}, journal = {Physical Review E}, volume = {100}, issue = {3}, pages = {032132}, numpages = {10}, year = {2019}, month = sep, publisher = {American Physical Society}, doi = {10.1103/PhysRevE.100.032132}, url = {https://link.aps.org/doi/10.1103/PhysRevE.100.032132}, } - Neuron

Control of Synaptic Specificity by Establishing a Relative Preference for Synaptic PartnersChundi Xu, Emma Theisen, Ryan Maloney, Jing Peng, Ivan Santiago, Clarence Yapp, Zachary Werkhoven, Elijah Rumbaut, and 11 more authorsNeuron, Sep 2019

Control of Synaptic Specificity by Establishing a Relative Preference for Synaptic PartnersChundi Xu, Emma Theisen, Ryan Maloney, Jing Peng, Ivan Santiago, Clarence Yapp, Zachary Werkhoven, Elijah Rumbaut, and 11 more authorsNeuron, Sep 2019The ability of neurons to identify correct synaptic partners is fundamental to the proper assembly and function of neural circuits. Relative to other steps in circuit formation such as axon guidance, our knowledge of how synaptic partner selection is regulated is severely limited. Drosophila Dpr and DIP immunoglobulin superfamily (IgSF) cell-surface proteins bind heterophilically and are expressed in a complementary manner between synaptic partners in the visual system. Here, we show that in the lamina, DIP mis-expression is sufficient to promote synapse formation with Dpr-expressing neurons and that disrupting DIP function results in ectopic synapse formation. These findings indicate that DIP proteins promote synapses to form between specific cell types and that in their absence, neurons synapse with alternative partners. We propose that neurons have the capacity to synapse with a broad range of cell types and that synaptic specificity is achieved by establishing a preference for specific partners.

@article{neuron2019, title = {Control of Synaptic Specificity by Establishing a Relative Preference for Synaptic Partners}, journal = {Neuron}, volume = {103}, number = {5}, pages = {865-877.e7}, year = {2019}, month = sep, issn = {0896-6273}, doi = {10.1016/j.neuron.2019.06.006}, url = {https://www.sciencedirect.com/science/article/pii/S0896627319305562}, author = {Xu, Chundi and Theisen, Emma and Maloney, Ryan and Peng, Jing and Santiago, Ivan and Yapp, Clarence and Werkhoven, Zachary and Rumbaut, Elijah and Shum, Bryan and Tarnogorska, Dorota and Borycz, Jolanta and Tan, Liming and Courgeon, Maximilien and Griffin, Tessa and Levin, Raina and Meinertzhagen, Ian A. and {de Bivort}, Benjamin and Drugowitsch, Jan and Pecot, Matthew Y.}, keywords = {synaptic specificity, synapse formation, cell recognition molecules, visual system, Dpr and DIP proteins}, } - NatNeuro

Optimal policy for multi-alternative decisionsSatohiro Tajima, Jan Drugowitsch, Nisheet Patel, and Alexandre PougetNature Neuroscience, Sep 2019

Optimal policy for multi-alternative decisionsSatohiro Tajima, Jan Drugowitsch, Nisheet Patel, and Alexandre PougetNature Neuroscience, Sep 2019Everyday decisions frequently require choosing among multiple alternatives. Yet the optimal policy for such decisions is unknown. Here we derive the normative policy for general multi-alternative decisions. This strategy requires evidence accumulation to nonlinear, time-dependent bounds that trigger choices. A geometric symmetry in those boundaries allows the optimal strategy to be implemented by a simple neural circuit involving normalization with fixed decision bounds and an urgency signal. The model captures several key features of the response of decision-making neurons as well as the increase in reaction time as a function of the number of alternatives, known as Hick’s law. In addition, we show that in the presence of divisive normalization and internal variability, our model can account for several so-called ‘irrational’ behaviors, such as the similarity effect as well as the violation of both the independence of irrelevant alternatives principle and the regularity principle.

@article{natneuro2019, title = {Optimal policy for multi-alternative decisions}, author = {Tajima, Satohiro and Drugowitsch, Jan and Patel, Nisheet and Pouget, Alexandre}, journal = {Nature Neuroscience}, volume = {22}, pages = {1503--1511}, year = {2019}, month = sep, doi = {10.1038/s41593-019-0453-9}, url = {https://doi.org/10.1038/s41593-019-0453-9}, } - JOSS

VBLinLogit: Variational Bayesian linear and logistic regressionJan DrugowitschJournal of Open Source Software, Sep 2019

VBLinLogit: Variational Bayesian linear and logistic regressionJan DrugowitschJournal of Open Source Software, Sep 2019@article{joss2019, doi = {10.21105/joss.01359}, url = {https://doi.org/10.21105/joss.01359}, year = {2019}, publisher = {The Open Journal}, volume = {4}, number = {38}, pages = {1359}, author = {Drugowitsch, Jan}, title = {VBLinLogit: Variational Bayesian linear and logistic regression}, journal = {Journal of Open Source Software}, } - NatComms

Prefrontal mechanisms combining rewards and beliefs in human decision-makingMarion Rouault, Jan Drugowitsch, and Etienne KoechlinNature Communications, Jan 2019

Prefrontal mechanisms combining rewards and beliefs in human decision-makingMarion Rouault, Jan Drugowitsch, and Etienne KoechlinNature Communications, Jan 2019In uncertain and changing environments, optimal decision-making requires integrating reward expectations with probabilistic beliefs about reward contingencies. Little is known, however, about how the prefrontal cortex (PFC), which subserves decision-making, combines these quantities. Here, using computational modelling and neuroimaging, we show that the ventromedial PFC encodes both reward expectations and proper beliefs about reward contingencies, while the dorsomedial PFC combines these quantities and guides choices that are at variance with those predicted by optimal decision theory: instead of integrating reward expectations with beliefs, the dorsomedial PFC built context-dependent reward expectations commensurable to beliefs and used these quantities as two concurrent appetitive components, driving choices. This neural mechanism accounts for well-known risk aversion effects in human decision-making. The results reveal that the irrationality of human choices commonly theorized as deriving from optimal computations over false beliefs, actually stems from suboptimal neural heuristics over rational beliefs about reward contingencies.

@article{natcomms2019, title = {Prefrontal mechanisms combining rewards and beliefs in human decision-making}, author = {Rouault, Marion and Drugowitsch, Jan and Koechlin, Etienne}, journal = {Nature Communications}, volume = {10}, pages = {301}, year = {2019}, month = jan, doi = {10.1038/s41467-018-08121-w}, url = {https://doi.org/10.1038/s41467-018-08121-w}, }

2017

- NatComms

Lateral orbitofrontal cortex anticipates choices and integrates prior with current informationRamon Nogueira, Juan M. Abolafia, Jan Drugowitsch, Emili Balaguer-Ballester, Maria V. Sanchez-Vives, and Rubén Moreno-BoteNature Communications, Mar 2017